It’s an ideal that’s only achievable when you’re able to set your own priorities.

Managers and executives generally don’t give two shits about yak shaving.

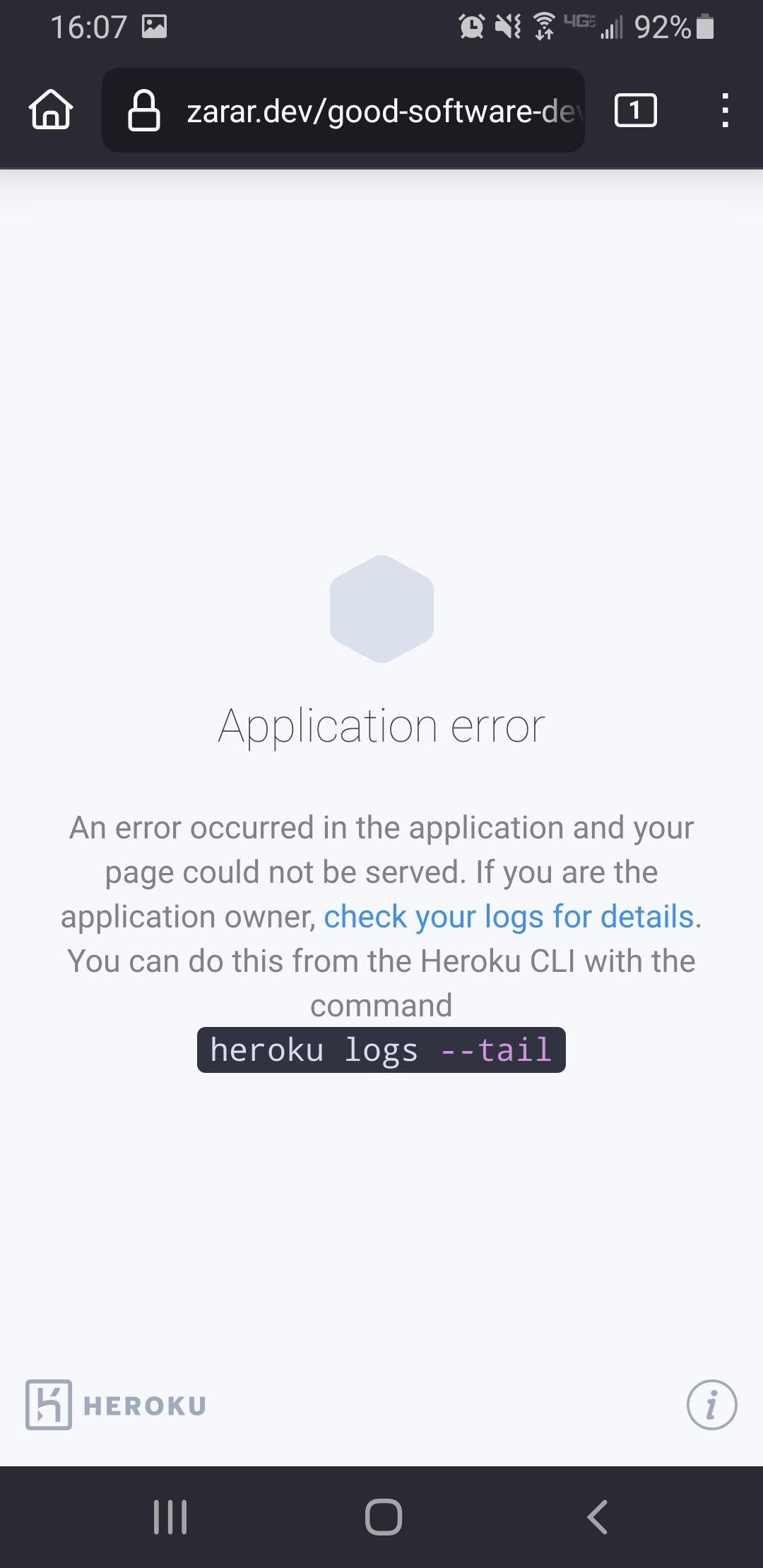

Tip #1: if you’re gonna post your programming blog to social media, make sure it can handle the traffic…?

The compiler is getting more and more parallel, but there are still a few bottlenecks. The frontend (parsing, macro expansion, trait resolution, HIR lowering) is still single-threaded, although there’s a parallel implementation on nightly.

Optimal core count really depends on the project you’re compiling. The compiler splits each crate into codegen units that LLVM can process in parallel. The default is currently 16 units for release builds and 256 for debug builds.

This theoretically means that you could continue to see performance gains up to 256 cores in debug builds, but in practice there are going to be other bottlenecks.
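To put a rough number on "other bottlenecks", here’s a minimal sketch of Amdahl’s law in Go. The 80% parallel fraction is purely an assumption for illustration, not a measured figure for rustc; the point is only that whatever stays serial caps the speedup no matter how many cores you add.

```go
package main

import "fmt"

// amdahlSpeedup returns the theoretical speedup for a workload where
// parallelFraction of the work (0..1) scales perfectly across workers
// and the remainder stays serial.
func amdahlSpeedup(parallelFraction float64, workers int) float64 {
	serial := 1 - parallelFraction
	return 1 / (serial + parallelFraction/float64(workers))
}

func main() {
	// Assumption for illustration only: 80% of the build parallelizes.
	const parallelFraction = 0.8
	for _, workers := range []int{1, 4, 16, 64, 256} {
		fmt.Printf("%3d workers: %.2fx\n", workers, amdahlSpeedup(parallelFraction, workers))
	}
}
```

With a serial share of 20%, the speedup can never reach 1 / 0.2 = 5x, even with all 256 codegen units busy.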

Compilation is very memory and disk-I/O intensive as well. Having a fast SSD and plenty of spare memory that the OS can use for caching files will help. You may also see a benefit from a processor with a large L3 cache, like AMD’s X3D processor variants.

Across a project, it depends on how many dependencies can be compiled in parallel. The dependencies for a crate have to be compiled before the crate itself can be compiled, so the upper limit to parallelism here is set by your dependency graph. But this really only matters for fresh builds.
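A rough sketch of that dependency-graph limit, in Go: the longest chain of crates has to build one after another, so it sets a floor on fresh build time regardless of core count. The crate names and graph below are hypothetical, and the sketch assumes the graph has no cycles.

```go
package main

import "fmt"

// criticalPath returns the number of crates on the longest dependency
// chain ending at root. Crates on that chain must build one after
// another, no matter how many cores are available.
func criticalPath(deps map[string][]string, root string, memo map[string]int) int {
	if depth, ok := memo[root]; ok {
		return depth
	}
	longest := 0
	for _, dep := range deps[root] {
		if d := criticalPath(deps, dep, memo); d > longest {
			longest = d
		}
	}
	memo[root] = longest + 1
	return memo[root]
}

func main() {
	// Hypothetical crate graph: each key depends on the crates listed.
	deps := map[string][]string{
		"app":   {"http", "json"},
		"http":  {"bytes"},
		"json":  {"bytes"},
		"bytes": {},
	}
	fmt.Println("crates in graph:", len(deps))
	fmt.Println("longest chain:", criticalPath(deps, "app", map[string]int{}))
}
```

Here `http` and `json` can build side by side, but `bytes` has to finish first and `app` has to wait for both, so three build steps are unavoidable.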

I feel like if your body follows the Unix filesystem structure, you have a real problem.

It’s a glob pattern (edit: tried to find a source that actually showed ** in use).

That’s why you have backups.

sudo rm /heart/arteries/**/clot

I wonder why I haven’t seen a standard open-source license for this.

This would be a lot more readable with some paragraph breaks.

Any highlights from those in the know?

It was $5k worth of training, and well worth it, since you still remember the lesson.

Yep.

That’s also not the most money I’ve ever unintentionally cost an employer.

The applications I’ve built weren’t designed for serverless deployment so I wouldn’t know. It seems like you pay a premium for the convenience though.

I ran up like a $5k bill over a couple weeks by having an application log in a hot loop when it got disconnected from another service in the same cluster. When I wrote that code, I expected the warnings to eventually get hooked up to page us to let us know that something was broken.

Turns out, disconnections happen regularly because ingress connections have like a 30 minute timeout by default. So it would time out, emit like 5 GB of logs before Kubernetes noticed the container was unhealthy and restarted it, rinse and repeat.

I know $5k is chump change at enterprise scale, but this was at a small scale startup during the initial development phase, so it was definitely noticed. Fortunately, the only thing that happened to me was some good-natured ribbing.
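For what it’s worth, here’s a minimal Go sketch of the two guards that would have capped that bill: exponential backoff between reconnect attempts, and a limiter on how often the warning gets logged. This is not the code from that application; every name and duration below is hypothetical, and the clock is simulated so the example runs instantly.

```go
package main

import (
	"fmt"
	"time"
)

// nextBackoff doubles the wait between reconnect attempts, up to limit.
func nextBackoff(current, limit time.Duration) time.Duration {
	next := current * 2
	if next > limit {
		return limit
	}
	return next
}

// logLimiter lets at most one message through per interval.
type logLimiter struct {
	interval time.Duration
	last     time.Time
}

// allow reports whether a message may be logged at time now.
func (l *logLimiter) allow(now time.Time) bool {
	if !l.last.IsZero() && now.Sub(l.last) < l.interval {
		return false
	}
	l.last = now
	return true
}

func main() {
	// Hypothetical values; tune them for the real service.
	wait := 100 * time.Millisecond
	limiter := &logLimiter{interval: time.Second}
	now := time.Now() // simulated clock, advanced manually below

	for attempt := 1; attempt <= 8; attempt++ {
		if limiter.allow(now) {
			fmt.Printf("attempt %d: still disconnected, retrying in %v\n", attempt, wait)
		}
		now = now.Add(wait) // a real loop would time.Sleep(wait) here
		wait = nextBackoff(wait, 5*time.Second)
	}
}
```

Either guard alone bounds the log volume; together they also stop the loop from hammering the other service while it’s down.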

It executes on a native thread in the background. That way it doesn’t stall the JavaScript execution loop, even if you give it a gigabyte of data to hash.

I once had a spambot @ me and like 100 other people I didn’t know on a repo I’d never touched before.

I reported it for spam and got an email from GitHub Support within an hour notifying me that they had taken action.

Actually, Android doesn’t really use Dalvik anymore. They still use the bytecode format, but built a new runtime. The architecture of that runtime is detailed on the page I linked. IIRC, Dalvik didn’t cache JIT compilation results and had to redo it every time the application was run.

FWIW, I’ve heard libgccjit doesn’t generate particularly high quality code. If the AOT compiled code was compiled with aggressive optimizations and a specific CPU in mind, of course it’ll be faster. JIT compiled code can meet or exceed native performance, but it depends on a lot of variables.

As for mawk vs JAWK vs go-awk, a JIT is not going to fix bad code. If it were a true apples to apples comparison, I’d expect a difference of maybe 30-50%, not ~2 orders of magnitude. A performance gap that wide suggests fundamental differences between the different implementations, maybe bad cache locality or inefficient use of syscalls in the latter two.

On top of that, you’re not really comparing the languages or runtimes so much as their regular expression engines. Java’s isn’t particularly fast, and neither is Go’s. Compare that to JavaScript and Perl, both languages with heavyweight runtimes, but which perform extraordinarily well on this benchmark thanks to their heavily optimized regex engines.

It looks like mawk uses its own bespoke regex engine, which is honestly quite impressive in that it performs that well. However, it only supports POSIX regular expressions, and doesn’t even implement braces, at least in the latest release listed on the site: https://github.com/ThomasDickey/mawk-20140914

(The author creates a new GitHub repo to mirror each release, which shows just how much they refuse to learn to use Git. That’s a respectable level of contempt right there.)

Meanwhile, Java’s regex engine is a lot more complex with more features, such as lookahead/behind and backreferences, but that complexity comes at a cost. Similarly, if go-awk is using Go’s regexp package, it’s using a much more complex regex engine than is strictly necessary. And Golang admits in their own FAQ that it’s not nearly as optimized as other engines like PCRE.

Thus, it’s really not an apples to apples comparison. I suspect that’s where most of the performance difference arises.

> Go has reference counting and heap etc, basically a ‘compiled VM’.

This statement is completely wrong. Like, to a baffling degree. It kinda makes me wonder if you’re trolling.

Go doesn’t use any kind of VM, and has never used reference counting for memory management as far as I can tell. It compiles directly to native machine code which is executed directly by the processor, but the binary comes with a runtime baked in. This runtime includes a tracing garbage collector and manages the execution of goroutines and related things like non-blocking sockets.
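You can poke at that baked-in runtime from any ordinary Go program. A small sketch using only the standard `runtime` package: it forces a collection and reads the runtime’s own counters. The helper name `gcCycles` is just made up for this example.

```go
package main

import (
	"fmt"
	"runtime"
)

// gcCycles forces a garbage collection and reports how many GC cycles
// the runtime had completed before and after it.
func gcCycles() (before, after uint32) {
	var stats runtime.MemStats
	runtime.ReadMemStats(&stats)
	before = stats.NumGC

	runtime.GC() // blocks until a full collection has finished

	runtime.ReadMemStats(&stats)
	after = stats.NumGC
	return before, after
}

func main() {
	before, after := gcCycles()
	fmt.Println("goroutines managed by the runtime:", runtime.NumGoroutine())
	fmt.Printf("completed GC cycles: %d -> %d\n", before, after)
}
```

There’s no interpreter or bytecode anywhere in that picture: the collector and scheduler are just more native code linked into the same binary.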

Additionally, heap management is a core function of any program compiled for a modern operating system. Programs written in C and C++ use heap allocations constantly unless they’re specifically written to avoid them. And depending on what you’re doing and what you need, a C or C++ program could end up with a more heavyweight collection of runtime dependencies than the JVM itself.

At the end of the day, trying to write the fastest code possible isn’t usually the most productive approach. When you have a job to do, you’re going to welcome any tool that makes that job easier.

Yeah but Go has the best error handling paradigm of any programming language ever created:

    ret, err := do_thing()
    if err != nil {
        return nil, err
    }

Don’t you just love doing that every 5 lines of code?

Android has actually employed a hybrid JIT/AOT compilation model for a long time.

The application bytecode is only interpreted on first run and afterwards if there’s no cached JIT compilation for it. The runtime AOT compiles well-known methods and then profiles the application to identify targets for asynchronous JIT compilation when the device is idle and charging (so no excess battery drain): https://source.android.com/docs/core/runtime/configure#how_art_works

Compiling on the device allows the use of profile-guided optimizations (PGO), as well as the use of any non-baseline CPU features the device has, like instruction set extensions or later revisions (e.g. ARMv8.5-A vs ARMv8).

If apps had to be distributed entirely as compiled object code, you’d either have to pre-compile artifacts for every different architecture and revision you plan to support, or choose a baseline to compile against and then use feature detection at runtime, which adds branches to potentially hot code paths.
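To make the "branches in hot code paths" point concrete, here’s a rough Go sketch of the baseline-plus-feature-detection approach. The `hasWideOps` flag and both `sum` variants are hypothetical stand-ins; a real program would probe the hardware (for example via the `golang.org/x/sys/cpu` package) and the fast path would use actual extension instructions.

```go
package main

import "fmt"

// hasWideOps stands in for a real CPU feature probe. It is hypothetical:
// a real program would query the hardware instead of hard-coding a value.
var hasWideOps = true

// sumBaseline is the portable implementation every device can run.
func sumBaseline(xs []int) int {
	total := 0
	for _, x := range xs {
		total += x
	}
	return total
}

// sumWide stands in for a version using an optional instruction set
// extension. Here it just processes two elements per iteration.
func sumWide(xs []int) int {
	total := 0
	i := 0
	for ; i+1 < len(xs); i += 2 {
		total += xs[i] + xs[i+1]
	}
	if i < len(xs) {
		total += xs[i]
	}
	return total
}

// sumChecked re-tests the feature flag on every call, which is the
// extra branch on the hot path.
func sumChecked(xs []int) int {
	if hasWideOps {
		return sumWide(xs)
	}
	return sumBaseline(xs)
}

// pickSum tests the flag once and returns the chosen implementation, so
// callers pay an indirect call instead of a repeated check.
func pickSum() func([]int) int {
	if hasWideOps {
		return sumWide
	}
	return sumBaseline
}

func main() {
	xs := []int{1, 2, 3, 4, 5}
	sum := pickSum()
	fmt.Println(sumChecked(xs), sum(xs))
}
```

Picking the implementation once up front moves the check out of the hot loop, but you still ship and maintain both code paths, which is the cost on-device compilation avoids.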

It would also require the developer to manually gather profiling data if they wanted to utilize PGO, which may limit them to just the devices they have on-hand, or paying through the nose for a cloud testing service like that offered by Firebase.

This is not to mention the massive improvement to the developer experience from not having to wait several minutes for your app to compile to test out each change. Call it laziness all you want, but it’s risky to launch a platform when no one wants to develop apps for it.

Any experienced Android dev will tell you it does kinda suck anyways, but it’d suck way worse if it was all C++ instead. I’d take Android development over iOS development any day of the week though. Xcode is one of the worst software products ever conceived, and you’re forced to use it to build anything for iOS.

You know there’s nothing stopping you from buying a server rack and loading that bad boy out with as much processing power as your heart desires, right?

Well, except money I guess, but according to this 1969 price list referenced on Wikipedia, a base model PDP-11 with cabinet would run you around $11,500. Adjusted for inflation, that’s about 95 grand. You could put together one hell of a home server for that kind of money.

If your job can be replaced with GPT, you had a bullshit job to begin with.

What so many people don’t understand is that writing code is only a small part of the job. Figuring out what code to write is where most of the effort goes. That, and massaging the egos of management/the C-suite if you’re a senior.